Humans can’t live without AI. Our brains won’t let that happen.

Recently, Insomniac revealed that its next game (presumably Marvel’s Spider-Man 2) will feature “very cool” new dialogue technology. Insomniac didn’t provide any further details, so my brain immediately jumped to generative Artificial Intelligence (AI), because of course anything “new” and “very cool” must be commandeered by our future robot overlords. A few days later, Shuhei Yoshida, head of Sony’s Independent Developer Initiative, offered GamesIndustry.biz a few thoughts on generative AI: “Those AI tools will be used in the future, not just for creating assets, but for animations, AI behaviour and even doing debug.”

To be frank, when I weigh the possible good against the probable bad, I tend to fear what generative AI will bring to video games. AI has been part of games for a while, but mostly as a background design tool (texture generation, for example, although texture generation is technically “procedural generation” rather than AI; it’s a sliding scale, and “procedural generation doesn’t typically use AI”). We haven’t yet seen AI as a marquee feature, and we don’t yet know how it will influence the foreground parts of games, like character movement, quest generation, or dialog.

I won’t pretend to know how designers will leverage AI for game design. I’m not that smart. But follow me as I allow my ignorance to breed fear for a moment.

Beyond the standard fears with AI—the loss of jobs for humans, mostly—I fear the loss of community.

Right now, in our human-curated gaming world, one of the great side effects of games is that many people can congregate around a shared experience. But when that experience is fractured into many (hundreds? millions?) of different experiences, how can a group of people gather ’round the water cooler/campfire to discuss a single experience? Will the live event become the de facto experience? …

Live streaming will be the only way to share a game experience.

One possible outcome of using generative AI for the foreground parts of video games is that Twitch-style live streaming will become an even greater component of video games than it already is (which is hard to imagine, I know).

Streaming will no longer be a way to share an experience between the streamer and other viewers but will become the only way to experience, with a group, a particular version of a game. If that streamer gets a side quest or a dialog option that nobody else will ever see, then the only way to become absorbed into the zeitgeist of a game is to be present during the micro-zeitgeist of a Tuesday evening streaming session.

I’m worried about this future. I’m busy on Tuesday evenings.

Whoa, Caleb! I think you are forgetting that generative AI isn’t necessarily something game designers will use as a real-time mechanic…

Generative AI creates the end product; it isn’t the end product.

Generative AI isn’t necessarily something that would be used during gameplay. In fact, much more likely (to Yoshida’s point), AI would be used during the development phase to create the assets that would then go into the final singular experience.

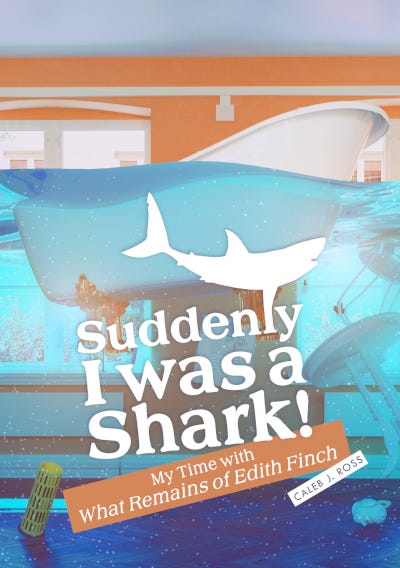

AI could also be useful during pre-production for ideating on concept art. In fact, I’m guilty of using the AI tool MidJourney to jumpstart my own solo creative brainstorm[1] when designing the cover of my newest book, Suddenly I was a Shark!: My Time with What Remains of Edith Finch (which releases June 12, 2023). Ultimately, the cover I created was not informed at all by the MidJourney output, but my mind was still opened to the possibility of using generative AI to get the brain juices flowing.

[Gallery: MidJourney Cover Inspiration]

If you have ever been part of a brainstorming session, you know that getting it started can be hard. Maybe we let AI kickstart it for us.

Whoa again, Caleb! I think you are also forgetting that most video games never offer a truly universal experience…

Games have rarely promised a single experience.

Games have always been procedural engines that rarely output a singular experience. The best-known example of procedural generation is the roguelike genre, which is built around randomly generated scenarios.

And beyond procedural generation as a genre mechanic, games of any genre sometimes have random enemy spawns and enemy pathing based on the player character’s behavior, which is, of course, subject to the whims of the (relatively randomly motivated) player.

There’s also RNG (random number generation), a catch-all term for how games randomize everything from loot drops to attack rates. Such things are technically random experiences.[2] In fact, under a powerful enough microscope, there is no singular experience.

In a video (featuring a much younger, baby-Caleb) titled “Are You Always Cheating in Video Games?” I argue that any deviation from a game designer’s intended mode is cheating, yet, paradoxically, it’s impossible for a game designer to insist upon a single mode. Therefore: no single experience.

Regarding the player’s adjustment of a difficulty setting, for example, I said: “…if the argument is that the normal, default mode is the correct, non-cheating mode, then playing either the easy mode or the hard mode is cheating [as either is a deviation from the intended play mode, despite one being more difficult].” Furthermore:

“By that logic, [players and critics] have a lot to demand from developers in order to agree on what is the default mode. You’ve got to look outside the game—not just difficulty settings, brightness level, effects volume, controller input map—but also how big your TV is, the comfort of your chair, the controller you are using, what food you’ve eaten…”

It’s impossible to build a critique around a single experience because a truly single experience is impossible. So maybe, possibly, just a little bit, a future of bespoke experiences is okay(ish).

Whoa for the third time, Caleb! I think you just convinced yourself that generative AI has a place in video games that doesn’t need to be met with fear…

A bright side for those of us who sometimes appreciate a curious human over the human curiosity.

When I imagine a future in which plot-critical elements of a game are unique per player, then I see a less interesting world where fans of gaming, and fans of gaming conversation, are less served than they are now. The conversation about a game becomes impossible when there is no shared view of what the game is. This possibility is scary. I can’t be convinced otherwise.

Buuuuut, even if I set aside both the benefits that generative AI could bring to the ideation phase of game development and the potential for a water-coolerless world, I still see one more small, yet highly speculative, benefit.[3] What happens, I wonder, when Death of the Author is fully realized, forcing criticism to abandon authorial intent?

What happens when a group of humans (gamers, museum-goers, concert audience members, etc.) collectively discuss a piece of art, as art, that has no practical human source?

Let’s imagine generations of distance between the input and the output where the human context has been completely phased out of art’s creation. What happens then?

Does the audience become hyper-focused on aesthetics to the detriment of the art’s intellectual implications?

Is a “human seed” necessary to have an intellectual argument, or can (must?) non-human art still be coerced into one? I mean, humans are the ones having this imagined argument, and humans must adapt to any situation with the tools we have available. So what’s left but to accept that a human with a brain will see every piece of art as a nail for our brain-hammer?

Technology isn’t scary to those raised by technology.

I understand that I’m likely being very reductive. Nuance is hard to parse when the event horizon isn’t even visible. On a smaller scale, my paranoia is the same paranoia that drives every aging person ever. To fear the unknown is natural. So I must constantly remind myself of a conversation I had years ago with author Stephen Graham Jones about the ebook’s (at that time impending) encroachment on paper books. I feared a generation that wouldn’t know the pleasures of physical books: the smell of a paper book, the feel of a page at your fingertips as you turn it, the glance down at the top of the book to see a bookmark marking your progress. Stephen argued that a new generation of readers will not lose those connections to books. Those connections will simply take on different forms. They will cherish the heat of an e-reader against their palms, the glow of its screen under the covers, and the brief lag between touching the screen and seeing the next page flash into existence.

In other words, a future generation of gamers will love generative AI art for reasons we in the current generation cannot fathom.

The human is an incredibly adaptable creature. Our brains won’t let us live without intellectual and emotional attachments to art. With that in mind, I embrace my curiosity about what generative AI will bring to video games. Yes, despite how likely it might be that AI will drain the office water cooler, I’m curious about how the big-brained human will refill that cooler, because the human cannot exist without a full office cooler.

Footnotes

[1] A small brainstorm…maybe, a brain microburst?

[2] Though practically speaking, they still mostly honor the plot points that inform the community conversation that I so cherish.

[3] Though possibly just as a curiosity rather than as a practical win. And for what it’s worth, I believe curiosity is a net positive in and of itself.